Safety Culture

Manoj Patankar, MBA, PhD, FRAeS, Saint Louis University, St. Louis, MO

James Duncan, MD, PhD, Mallinckrodt Institute of Radiology, St. Louis, MO

Updated July 2018

Culture Is a Key Driver of Radiation Safety

Because culture is defined in many different ways, it is often viewed as an ethereal topic. Still, its importance is widely acknowledged; as the adage goes, “culture eats strategy for lunch.” For the purpose of radiation safety during imaging procedures, safety culture can be described as a basic mindset, or the way employees behave when they believe no one is watching. However, a more specific definition is needed, since improving radiation safety will require individuals and organizations to repeatedly assess and improve their safety culture.

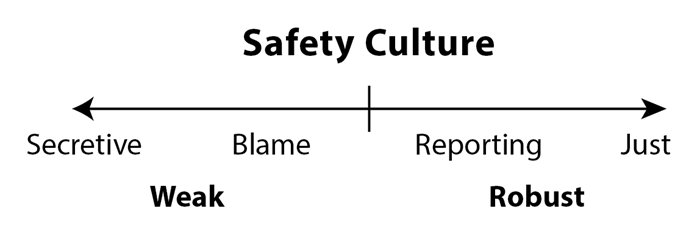

Attempts to measure safety culture should examine the organization’s attitudes, behaviors and available evidence to assess its patient safety culture in general and its radiation safety culture in particular. Individual components can be scored, and these scores can be combined to yield an overall assessment that falls on a scale from weak (secretive) to robust (just).

Figure 1: Safety culture scale

Table 1: The safety culture perspective

| Safety culture perspective [1] | Attributes of weak safety culture (secretive or blaming culture) | Attributes of robust safety culture (learning or just culture) |
| --- | --- | --- |
| Beliefs | “Radiation safety is someone else’s responsibility,” where “someone else” usually refers to a medical physicist, physician, technologist, radiation safety officer, etc. | “Radiation safety is everyone’s responsibility” |
| Attitudes | “Years ago, a committee created policies and procedures to satisfy the requirements of The Joint Commission and other regulators” | “We continually update our policies and procedures because we want to be the best at getting better” [2] |
| Values | “Complications are an inevitable consequence of performing fluoroscopic procedures” | “It is not okay for me to work in a place where it is okay for people to get hurt” [3] |
| Stories and legends | Stories of dramatic rescues without delineating the preceding failures | Legends include near misses such as NASA’s gold-plated bolt [4] |
| Safety equipment | Used when convenient | Used reliably |
| Event reporting system | “Why report events? Such reports just open the door for legal or disciplinary action.” | “We report events and near misses. We then review them and learn from them. How else can we improve?” |
| Knowledge of results | Data on radiation safety metrics is difficult to locate, infrequently updated and rarely discussed in group or hospital-wide meetings | Data on radiation safety is readily available, regularly updated and widely disseminated in group and hospital-wide meetings |
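The scoring approach described above, in which individual components are scored and then combined into an overall assessment, can be sketched in a few lines. This is a minimal sketch under assumptions that do not come from the source: each of the seven perspectives in Table 1 is rated on a hypothetical 1-to-5 scale (1 = weak/secretive, 5 = robust/just), the components are weighted equally, and the names `PERSPECTIVES`, `overall_score` and `scale_position` are illustrative only. Validated instruments define their own items and scoring rules.

```python
from statistics import mean

# The seven perspectives listed in Table 1.
PERSPECTIVES = (
    "beliefs",
    "attitudes",
    "values",
    "stories_and_legends",
    "safety_equipment",
    "event_reporting_system",
    "knowledge_of_results",
)

# Assumed rating scale: 1 = weak (secretive/blaming), 5 = robust (learning/just).
SCALE_MIN, SCALE_MAX = 1.0, 5.0


def overall_score(ratings: dict[str, float]) -> float:
    """Combine per-perspective ratings into one equally weighted mean (an assumption)."""
    missing = [p for p in PERSPECTIVES if p not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    for p in PERSPECTIVES:
        if not SCALE_MIN <= ratings[p] <= SCALE_MAX:
            raise ValueError(f"rating for {p!r} must be between {SCALE_MIN} and {SCALE_MAX}")
    return mean(ratings[p] for p in PERSPECTIVES)


def scale_position(score: float) -> float:
    """Place a score on the weak-to-robust scale: 0.0 is the weak end, 1.0 the robust end."""
    return (score - SCALE_MIN) / (SCALE_MAX - SCALE_MIN)


if __name__ == "__main__":
    # Hypothetical ratings for illustration only.
    example = {
        "beliefs": 4, "attitudes": 3, "values": 4, "stories_and_legends": 2,
        "safety_equipment": 5, "event_reporting_system": 3, "knowledge_of_results": 2,
    }
    score = overall_score(example)
    print(round(score, 2), round(scale_position(score), 2))  # prints: 3.29 0.57
```

Equal weighting keeps the sketch transparent. An organization that considers, say, the event reporting system more telling than stories and legends could substitute a weighted mean, but that choice would itself need justification.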

Changing an Organization's Culture

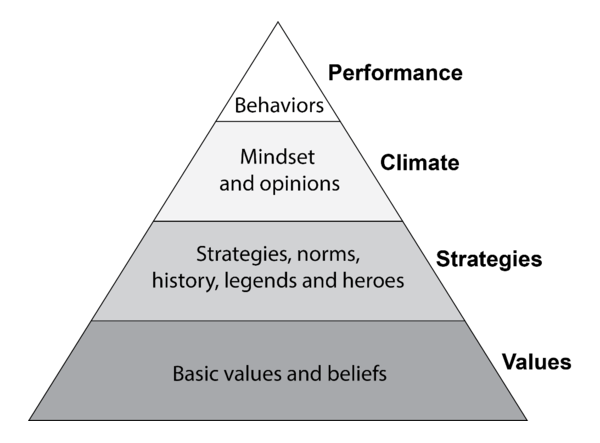

Before we consider changing an organization’s culture, we must first understand the present culture. Safety culture can be viewed as the collective manifestation of a pyramid structure [6]: values and unquestioned assumptions at the base; above them, organizational structures, strategies, leadership, policies and procedures; next, the prevailing safety climate, which is manifested in the attitudes and opinions of the members of the organization; and finally, at the top, safety performance (behaviors or outcomes).

All too often, we focus on poor outcomes, investigate their causes and strive to prevent them in the future. This approach is reactive at best and does not necessarily improve the overall safety culture of the organization.

Figure 2: Safety culture pyramid

Working from the base of this pyramid, one should examine the stated (espoused) and practiced (enacted) organizational values [7]. Are safety and continuous improvement organizational values? Where and how do they manifest themselves? If they are not explicitly stated, they should be. Next, one must examine the organizational structures, policies, procedures and practices. How does the system support the value of safety? What specific organizational structures, policies, procedures, etc., protect patients and enable the medical community to continuously improve? How do we handle genuine mistakes versus recklessness? How do we track our improvements in safety and quality? What behaviors do we reward, and what behaviors do we penalize?

Next comes the safety climate, which can be assessed using a number of survey tools. These tools provide a snapshot of the extant attitudes and opinions regarding safety; unfortunately, they are often used as a proxy for a full “cultural assessment.”

Finally, one must examine behaviors: not just those of the practitioners directly engaged in imaging procedures, but those of everyone in the system. How do people react when there is an error? Do the consequences of the error or the magnitude of harm drive the reaction, or does the reaction focus on the underlying pattern of behavior regardless of the consequence? Does the system emphasize the person who committed the error, the system that created the conditions for the error, or both? How does the system handle disclosure of such errors to patients and their families? Ultimately, a true cultural assessment must involve all the elements of the pyramid and their influences on each other.

Once the extant culture is clearly understood, interventions can be developed for each layer of the pyramid. The impulse to pursue quick fixes, such as simply implementing an error reporting system like the one at institution “X,” tends to be strong, but such fixes are unlikely to be sustainable in the long run if nothing else in the pyramid changes. For example, if the incentives not to report are much stronger than the incentives to report, merely having a reporting system will not produce any significant or lasting change. This is how we end up proving the adage “culture eats strategy for lunch”: we never really attempted to change the culture; we only instituted another program or strategy. Further, cultural change is evolutionary; it takes sustained, collective effort over a long period of time.

Practical Steps to Transform Safety Culture

- Make safety and continuous improvement organizational values.

- Remove organizational structures, processes and policies that are inconsistent with safety; if necessary, replace them with new processes (such as a non-punitive error reporting system) that are consistent with the new organizational value. Sometimes it may not be necessary to institute a new process; it may be enough to use the existing process with a different mindset and different operational goals. Consider existing institutional incentives: are people incentivized to act in the interest of safety? How could the incentives be realigned to ensure that people act in the interest of safety at all times?

- Measure employee attitudes and opinions annually and compare the patterns across employee groups as well as longitudinally. Are there significant differences? Why? Is the overall climate changing in the desired direction? How can the momentum be maintained?

- Focus on minimizing risky behaviors and maximizing learning from every procedure, regardless of the outcome; define a set of key behaviors as baseline expectations for radiology professionals; and establish penalties for reckless behavior and forgiveness for unintentional errors.
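The third step above, comparing climate survey results across employee groups and over time, can be sketched as a small aggregation. This is a minimal illustration under assumed conditions: each response is a hypothetical `(year, group, score)` record on an assumed 1-to-5 agreement scale, and the helper names `Response`, `group_means` and `year_over_year` are illustrative, not part of any survey tool. The sketch reports only group means and year-over-year changes; deciding whether a difference is significant would require an appropriate statistical test, which it does not perform.

```python
from collections import defaultdict
from statistics import mean
from typing import NamedTuple


class Response(NamedTuple):
    """One hypothetical survey response: year, employee group, score on an assumed 1-5 scale."""
    year: int
    group: str
    score: float


def group_means(responses: list[Response]) -> dict[tuple[int, str], float]:
    """Average score for each (year, group) pair."""
    buckets: dict[tuple[int, str], list[float]] = defaultdict(list)
    for r in responses:
        buckets[(r.year, r.group)].append(r.score)
    return {key: mean(scores) for key, scores in buckets.items()}


def year_over_year(means: dict[tuple[int, str], float]) -> dict[str, list[tuple[int, float]]]:
    """For each group, the change in mean score between consecutive surveyed years."""
    by_group: dict[str, list[tuple[int, float]]] = defaultdict(list)
    for (year, group), m in means.items():
        by_group[group].append((year, m))
    changes: dict[str, list[tuple[int, float]]] = {}
    for group, points in by_group.items():
        points.sort()  # chronological order
        changes[group] = [
            (later_year, later - earlier)
            for (_, earlier), (later_year, later) in zip(points, points[1:])
        ]
    return changes


if __name__ == "__main__":
    # Hypothetical responses for illustration only.
    data = [
        Response(2017, "technologists", 3.0),
        Response(2017, "technologists", 4.0),
        Response(2018, "technologists", 4.0),
        Response(2017, "physicians", 3.0),
        Response(2018, "physicians", 2.5),
    ]
    print(year_over_year(group_means(data)))
    # prints: {'technologists': [(2018, 0.5)], 'physicians': [(2018, -0.5)]}
```

Diverging trends like the ones in this made-up example (one group improving while another declines) are exactly the kind of pattern the step asks about: "Are there significant differences? Why?"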

Having set safety as an organizational value, one could establish pre- and post-procedure briefing protocols. Before each procedure, the briefing establishes a margin of safety for radiation use: Do we have a monitoring strategy in place? Are we choosing protocols that optimize radiation use? After each procedure, the dose metrics could be reviewed to answer two questions: Did we achieve the desired result with an adequate margin of safety? How could we improve our performance? The lessons from each procedure should then be fed into the next one. A regular practice of pre-procedure briefing and post-procedure debriefing will help ensure that safety and continuous improvement are enacted as organizational values. Over time, the culture will change.

In the meantime, remember to collect artifacts, since all of them provide evidence of a culture: policies and procedures; awards, notices of exemplary performance and recognition of good catches that symbolize the new culture; examples of how errors are handled (case studies); briefing and debriefing checklists; and so on.

An organization’s safety culture can be improved through such planned interventions. For example, establishing a Just Culture requires promoting the mindset that, while human errors are inevitable, robust systems are designed to absorb the impact of these very predictable errors. When errors and near misses are routinely reported, the system can be redesigned to reduce the frequency and severity of errors and to detect them before they cause harm. Just Culture also means drawing a clear line between acceptable and unacceptable behaviors.

Efforts to change culture must recognize that culture rarely undergoes dramatic shifts; rather, it tends to evolve over time. One very tangible means of establishing a reporting culture for radiation safety is to begin using an event reporting system to capture cases in which the observed dose is substantially higher than expected. Such cases are clear learning opportunities, and the subsequent investigations should focus on identifying the factors that caused the large difference between the expected and observed dose. Every effort should be made to avoid “shaming and blaming” the individuals who participated in the procedure. Because unintended errors occur frequently, any improvement that follows the inevitable lectures to “be more careful” or “pay more attention” will likely be modest and short-lived.
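The reporting trigger described above, capturing cases in which the observed dose is substantially higher than expected, can be sketched as a simple screening rule. The source does not specify a dose metric or a threshold, so both are assumptions here: the sketch compares a generic observed dose value with an expected value recorded in the same units and flags cases whose ratio exceeds a hypothetical factor of 2. The names `Procedure`, `needs_event_report`, `cases_to_review` and `DEFAULT_RATIO_THRESHOLD` are illustrative only; a real program would choose its metrics (for example, CTDIvol or DLP for CT) and thresholds locally.

```python
from dataclasses import dataclass

# Hypothetical screening factor: flag when observed dose exceeds expected by more than 2x.
DEFAULT_RATIO_THRESHOLD = 2.0


@dataclass(frozen=True)
class Procedure:
    """A hypothetical procedure record; both dose fields must use the same units."""
    case_id: str
    protocol: str
    expected_dose: float
    observed_dose: float


def needs_event_report(proc: Procedure, threshold: float = DEFAULT_RATIO_THRESHOLD) -> bool:
    """True when the observed dose is more than `threshold` times the expected dose."""
    if proc.expected_dose <= 0:
        raise ValueError(f"{proc.case_id}: expected dose must be positive")
    return proc.observed_dose / proc.expected_dose > threshold


def cases_to_review(procs: list[Procedure], threshold: float = DEFAULT_RATIO_THRESHOLD) -> list[str]:
    """Case IDs to enter into the event reporting system for a systems-focused review."""
    return [p.case_id for p in procs if needs_event_report(p, threshold)]


if __name__ == "__main__":
    # Hypothetical cases for illustration only.
    procs = [
        Procedure("A1", "head CT", expected_dose=50.0, observed_dose=55.0),
        Procedure("A2", "head CT", expected_dose=50.0, observed_dose=130.0),
    ]
    print(cases_to_review(procs))  # prints: ['A2']
```

The output is only a list of cases to learn from. Consistent with the text, the review that follows should look for the factors behind the discrepancy rather than assign blame to the people involved.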

References

1. Salas E, Jentsch F, Maurino D. Human factors in aviation. 2nd ed., revised. Academic Press; 2010. Available at: http://books.google.com/books?id=ohjZXuvVTc0C&dq

2. Hanna J. Cincinnati Children’s Hospital Medical Center. Harvard Business School Newsletter, June 28, 2010. Available at: http://hbswk.hbs.edu/item/6441.html

3. Knowles RN. The leadership dance: pathways to extraordinary organizational effectiveness. Center for Self-Organizing Leadership; 2002. Available at: http://books.google.com/books?id=5pwBAAAACAAJ&dq

4. Berwick D. Improving patient safety. Harvard Health Policy Review. 2000;1(1). Available at: http://www.hcs.harvard.edu/~epihc/currentissue/fall2000/berwick3.html

5. AHRQ Hospital Survey on Patient Safety Culture. Agency for Healthcare Research and Quality, US Department of Health and Human Services. Available at: http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/index.html. Accessed September 25, 2014.

6. Patankar MS, Sabin EJ, Bigda-Peyton, Brown JP. Safety culture: building and sustaining a cultural change in aviation and healthcare. Ashgate Publishing, Ltd; 2012. Available at: http://books.google.com/books?id=wVylT9W_6PAC&dq

7. Schein EH. Organizational culture and leadership. John Wiley & Sons; 2010. Available at: http://books.google.com/books?id=DlGhlT34jCUC&dq